A/B winner auto-selection: AI-led A/B testing to self-optimize customer journeys

Updated on Oct 21, 2021

Tackling unpredictable customer behavior

Building cross-channel marketing campaigns that maximize engagement, conversion, or retention is both a science and an art. In essence, customer journey orchestration is about visualizing and accounting for the actions (and inactions) that customers take along the way.

In reality, it isn't that simple. Your customers behave inconsistently, and even the best-designed journeys might not be robust enough to counter this unpredictability.

Of course, AI-led Predictive Segments can offset some of this, but there's a lot more to it, such as extensive testing of and experimentation with your:

- Channel mix

- Subject lines or campaign titles

- Calls to action (CTAs)

- Send times

- Automated journeys

A/B split testing is the secret sauce for optimizing your marketing automation strategy.

Our cross-channel journey builder, Architect, lets you do that and much more.

What is A/B split testing in Architect?

A/B Split Testing allows you to branch the flow of your journeys to compare the performance of two or more path variations. You can test different channels, subject lines, and in-body content by randomly allocating a defined percentage of your audience to each path.
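To make the random allocation concrete, the following is a minimal Python sketch of splitting an audience across paths by defined percentages. It is not Architect's implementation: the `assign_path` function and the path labels are hypothetical, and hashing each customer ID (so a returning customer always lands on the same path) is an assumption about how such a split could be kept stable.

```python
import hashlib


def assign_path(customer_id: str, split: dict[str, float]) -> str:
    """Assign a customer to one path variation, weighted by audience percentage.

    Hypothetical sketch: `split` maps path labels to percentages summing to 100.
    """
    total = sum(split.values())
    if abs(total - 100.0) > 1e-9:
        raise ValueError(f"split percentages must sum to 100, got {total}")

    # Hash the customer ID to a stable pseudo-random point in [0, 100),
    # so the same customer is always routed to the same path.
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    point = int.from_bytes(digest[:8], "big") / 2**64 * 100

    # Walk the cumulative percentages until the point falls inside a bucket.
    cumulative = 0.0
    for path, pct in split.items():
        cumulative += pct
        if point < cumulative:
            return path
    return path  # guard against floating-point rounding at the upper edge


# Usage: four equal paths, e.g. one per channel.
split = {"A": 25.0, "B": 25.0, "C": 25.0, "D": 25.0}
print(assign_path("customer-42", split))
```

Over a large audience, each path receives roughly its defined share, while any individual customer's assignment looks random but never changes.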

Depending on your specific business goal, whether that's increasing engagement rates, conversion rates, or revenue, you can then identify the path variation that performs best.

Based on performance, you can then edit your journey and optimize it further to keep hitting your business goal.

Sounds simple?

Now imagine making this entire exercise of testing and experimentation even simpler by removing the manual effort!

Enter A/B Split Winner Auto-Selection.

What is A/B split winner auto-selection?

Think of this as our version of AI-powered A/B split or A/B/n testing. It uses machine learning algorithms to determine the winning path variation over a specified time period and then automatically direct customers along it.

Your winner auto-selection depends on two key variables that you define:

- Winning Metric: The metric to optimize for your goal, for example, Conversion Rate or Revenue.

- Calculation Duration: The time over which this metric is calculated and the winning path is announced, for example, 30 days.

With this feature, you no longer have to manually track A/B split test results and edit your journey on the go. We do that for you.

Say hello to self-optimizing customer journeys, with just a few clicks.

How does A/B split winner auto-selection work?

Here's how you can design and deploy frictionless journeys with this feature.

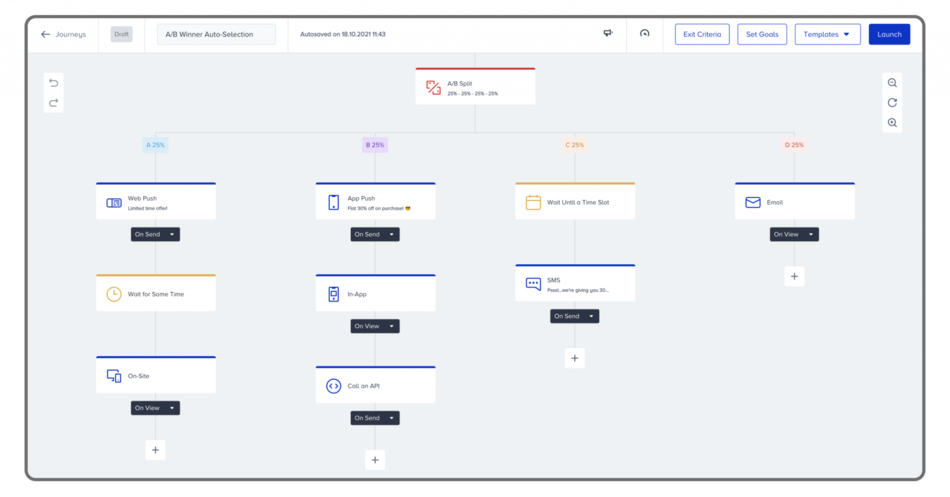

Let’s take the example of a fashion eCommerce brand looking to maximize conversion rates by offering a discount across four engagement channels on four path variations: Web Push Notifications, App Push Notifications, SMS, and Email. This journey and each path variation might look something like this our cross-channel journey orchestrator, Architect:

By enabling Winner Auto-Selection on this A/B/n test, you can move beyond manual A/B split testing and actually optimize for multiple elements in every path variation. In addition, you can:

- Determine the best channel, combination of channels, content, and offers that help you achieve your business goal.

- Identify the ideal communication frequency via the optimal wait time.

- Improve the metrics tied to your business goal by optimizing entire journey flows automatically.

You no longer need to test each element individually when you let our machine learning algorithms auto-identify the winning journey path and direct customers along it.

How do you measure impact?

The proof of your journey optimization pudding lies in measuring the performance for each alternative path that you define.

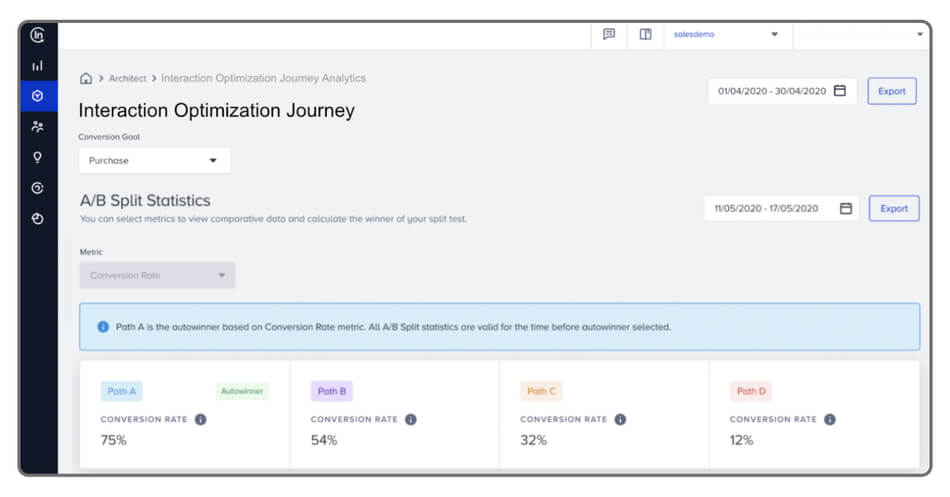

The “A/B Split” element on the journey canvas gives you a quick snapshot of the number of customers who arrive on each path. To dive deeper into the analytics, click “Go to Statistics”, which takes you to the “A/B Split Statistics” screen.

The “A/B Split Statistics” screen provides a complete view of the performance of each path, based on the pre-defined metric, with the auto-selected winning path clearly highlighted. In the example above, Path A is the winner.

The performance of the other paths shows how each variation fared on the chosen metric before A/B Split Winner Auto-Selection took effect.

Why choose A/B split winner auto-selection?

Manually gathering data and determining the winning variation makes every A/B split test costly in both time and money. Meanwhile, every customer directed to a low-performing variation is an opportunity cost that takes you further away from your business goals.

That's not the case with AI-powered A/B testing. Its adaptive nature helps minimize the time and money lost while a winning variation is being identified. Our system automatically calculates and determines the winner for you, significantly reducing the manual intervention required on your part.
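This post doesn't say which algorithm powers Architect's adaptive testing. One common way to make an A/B/n test adaptive is a multi-armed bandit such as Thompson sampling, sketched below in Python purely as an illustration of the idea: traffic shifts toward better-performing paths while the test is still running, instead of being split evenly until the end. The function names and the simulated conversion rates are hypothetical, not real campaign results.

```python
import random


def thompson_choose(successes: dict[str, int], failures: dict[str, int],
                    rng: random.Random) -> str:
    """Pick the next path via Thompson sampling on Beta posteriors.

    Each path's conversion rate has a Beta(1, 1) (uniform) prior; we draw one
    plausible rate per path and route the customer to the highest draw.
    """
    draws = {
        path: rng.betavariate(successes[path] + 1, failures[path] + 1)
        for path in successes
    }
    return max(draws, key=draws.get)


def simulate(true_rates: dict[str, float], customers: int, seed: int = 7) -> dict[str, int]:
    """Simulate routing customers; returns how many were sent down each path."""
    rng = random.Random(seed)
    successes = {p: 0 for p in true_rates}
    failures = {p: 0 for p in true_rates}
    sent = {p: 0 for p in true_rates}
    for _ in range(customers):
        path = thompson_choose(successes, failures, rng)
        sent[path] += 1
        # Hypothetical outcome: convert with the path's (unknown to the bandit) true rate.
        if rng.random() < true_rates[path]:
            successes[path] += 1
        else:
            failures[path] += 1
    return sent


print(simulate({"A": 0.10, "B": 0.02}, customers=5000))
```

In the simulation, most customers end up on the stronger path long before a fixed-duration test would have finished, which is the opportunity-cost saving described above.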

To learn more about how you too can build, test, and launch self-optimizing, no-code cross-channel customer journeys with ease, get in touch with us today!